Instance Variable Performance

Jun 26, 2019 @ 8:14 am

Let’s start today’s post with a weird Ruby benchmark:

require "benchmark/ips"

class Foo
  def initialize forward
    forward ? go_forward : go_backward
  end

  ivars = ("a".."zz").map { |name| "@#{name} = 5" }

  # define the go_forward method
  eval "def go_forward; #{ivars.join("; ")} end"

  # define the go_backward method
  eval "def go_backward; #{ivars.reverse.join("; ")} end"
end

# Heat
Foo.new true
Foo.new false

Benchmark.ips do |x|
  x.report("backward") { 5000.times { Foo.new false } }
  x.report("forward") { 5000.times { Foo.new true } }
end

This code defines a class that sets a bunch of instance variables, but the order that the instance variables are set depends on the parameter passed in to the constructor. When we pass true, it defines instance variables “a” through “zz”, and when we pass false it defines them “zz” through “a”.

Here’s the result of the benchmark on my machine:

$ ruby weird_bench.rb
Warming up --------------------------------------
            backward     3.000  i/100ms
             forward     2.000  i/100ms
Calculating -------------------------------------
            backward     38.491  (±10.4%) i/s -    192.000  in   5.042515s
             forward     23.038  (± 8.7%) i/s -    114.000  in   5.004367s

For some reason, defining the instance variables backwards is faster than defining the instance variables forwards. In this post we’ll discuss why. But for now, just know that if you want performant code, always define your instance variables backwards (just kidding, don’t do that).

How Are Instance Variables Stored?

In Ruby (specifically MRI), object instances point at an array, and instance variables are stored in that array. Of course, we refer to instance variables by names, not by array indexes, so Ruby keeps a map of “names to indexes” which is stored on the class of the object.

Let’s say we have some code like this:

class Foo
  def initialize
    @a = "foo"
    @b = "bar"
    @c = "baz"
    @d = "hoge"
  end
end

Foo.new

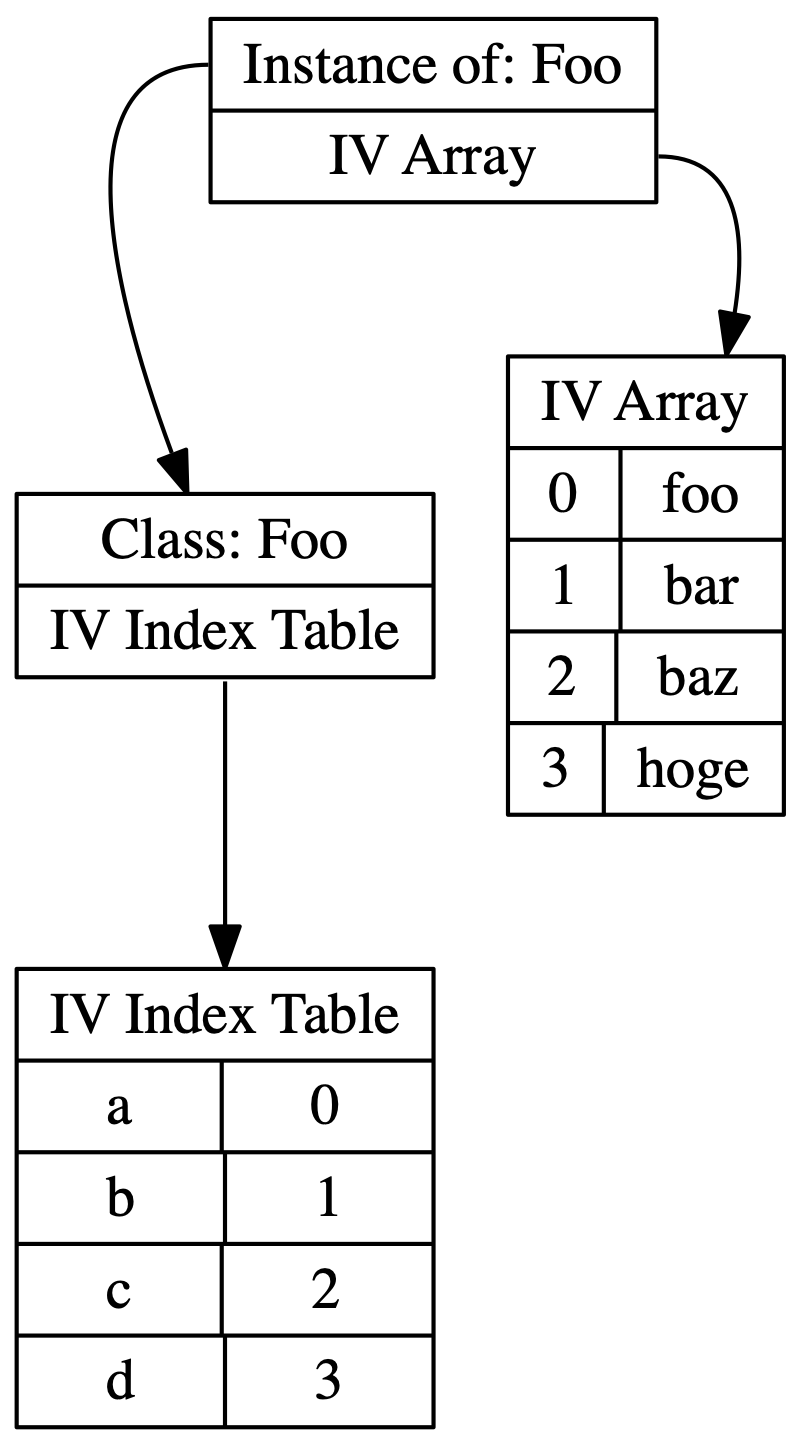

Internally, the object relationship will look something like this:

The class points at a map of “names to indexes” called the “IV Index Table”. The IV Index Table contains the names of the instance variables along with the index of where to find that instance variable.

The instance points at the class, and also points at an array that contains the actual values of the instance variables.

Why go to all this trouble to map instance variable names to array offsets? The reason is that it is much faster to access an array element than look up something from a hash. We do have to do a hash lookup to find the array element, but instance variables have their own inline cache, so the lookup doesn’t occur very often.
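To make the “hash lookup once, array access afterwards” idea concrete, here’s a toy sketch in plain Ruby. Everything in it is hypothetical (the CallSiteCache class, the constants, the counter); MRI does this in C, and its real inline cache also has to check that the object’s class hasn’t changed, which this sketch ignores.

```ruby
# Toy model only: every name here is made up for illustration.
IV_INDEX_TABLE = { a: 0, b: 1, c: 2, d: 3 }.freeze # lives on the class
IVARS = ["foo", "bar", "baz", "hoge"].freeze       # lives on the instance

# One cache per call site. A call site always reads the same ivar name,
# so it only needs to remember the index it found last time.
class CallSiteCache
  attr_reader :hash_lookups

  def initialize
    @index = nil
    @hash_lookups = 0
  end

  def read(name, index_table, ivars)
    if @index.nil?
      # Slow path: consult the name => index map.
      @hash_lookups += 1
      @index = index_table.fetch(name)
    end
    # Fast path: plain array access.
    ivars[@index]
  end
end

cache = CallSiteCache.new
3.times { puts cache.read(:c, IV_INDEX_TABLE, IVARS) }
puts "hash lookups: #{cache.hash_lookups}"
```

Three reads go through the same cache here, but only the first one touches the hash.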

Setting Instance Variables in Slow Motion

I want to walk through exactly what happens when instance variables are set, but we’re going to do it twice. We’ll use the code below:

class Foo
  def initialize
    @a = "foo"
    @b = "bar"
    @c = "baz"
    @d = "hoge"
  end
end

Foo.new
Foo.new

Ruby creates the instance variable index table lazily, so it doesn’t actually exist until the first time the code executes. The following GIF shows the execution flow for the first time Foo.new is called:

The first time initialize is executed, the Foo class doesn’t have an instance variable index table associated with it, so when the first instance variable @a is set, we create a new index table, then set @a to be index 0, then set the value “foo” in the instance variable array at index 0.

When we see instance variable @b, it doesn’t have an entry in the index table, so we add a new entry that points to position 1, then set position 1 in the array to “bar”.

This process repeats for each of the instance variables in the method.

Now let’s look at what happens the second time we call Foo.new:

This time, the class already has an instance variable index table associated with it. When the instance variable @a is set, it exists in the index table with position 0, so we set “foo” to position 0 in the instance variable list.

When we see instance variable @b, it already has an entry in the index table with position 1, so we set “bar” to position 1 in the instance variable list.

This process repeats for each of the variables in the method.
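The two walkthroughs above can be sketched as a toy model in plain Ruby. ToyClass, index_for, toy_new and the log are all names I made up for illustration; this is not MRI’s implementation, just the same sequence of steps.

```ruby
# Toy model only: hypothetical names, not MRI's real data structures.
class ToyClass
  attr_reader :iv_index_table, :log

  def initialize
    @iv_index_table = nil # not allocated until the first ivar is set
    @log = []
  end

  # Return the array position for `name`, creating the table and the
  # entry on demand.
  def index_for(name)
    if @iv_index_table.nil?
      @iv_index_table = {}
      @log << "create table"
    end
    unless @iv_index_table.key?(name)
      @iv_index_table[name] = @iv_index_table.size
      @log << "add #{name} => #{@iv_index_table[name]}"
    end
    @iv_index_table[name]
  end
end

# Stand-in for Foo.new: sets four ivars and returns the value array.
def toy_new(klass)
  ivars = []
  { a: "foo", b: "bar", c: "baz", d: "hoge" }.each do |name, value|
    ivars[klass.index_for(name)] = value
  end
  ivars
end

foo_class = ToyClass.new
toy_new(foo_class)
after_first = foo_class.log.size
toy_new(foo_class)

puts foo_class.log
puts "table changes during second allocation: #{foo_class.log.size - after_first}"
```

All of the table bookkeeping happens during the first allocation; the second one only reads the table.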

We can actually observe the lazy creation of the index table by using ObjectSpace.memsize_of:

require "objspace"

class Foo
  def initialize
    @a = "foo"
    @b = "bar"
    @c = "baz"
    @d = "hoge"
  end
end

p ObjectSpace.memsize_of(Foo) # => 520
Foo.new
p ObjectSpace.memsize_of(Foo) # => 672
Foo.new
p ObjectSpace.memsize_of(Foo) # => 672

The size of Foo is smaller before we instantiate our first instance, but remains the same size after subsequent allocations. Neat!

Let’s do one more example, but with the following code:

class Foo
  def initialize init_all
    if init_all
      @a = "foo"
      @b = "bar"
      @c = "baz"
      @d = "hoge"
    else
      @c = "baz"
      @d = "hoge"
    end
  end
end

Foo.new true
Foo.new false

After the first call of Foo.new true, the Foo class will have an instance variable index table just like the previous examples. @a will be associated with position 0, @b with position 1, and so on. But what happens on the second allocation at Foo.new false?

In this case, we already have an index table associated with the class, but @c is associated with position 2 in the instance variable array, so we have to expand the array leaving position 0 and 1 unset (internally Ruby sets them to Qundef). Then @d is associated with position 3, and it is set as usual.

The important part about this is that instance variable lists must expand to the width required for the index offset. Now let’s talk about how the list expands.
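Here’s a tiny sketch of that “leave a gap” behavior using an ordinary Ruby array. The UNDEF symbol and the store_at helper are stand-ins I invented; Qundef itself is an internal MRI value that isn’t visible from Ruby code.

```ruby
UNDEF = :undef # stand-in for MRI's internal Qundef marker

# Store `value` at `index`, padding any skipped slots with UNDEF.
def store_at(ivars, index, value)
  ivars << UNDEF while ivars.size < index
  ivars[index] = value
  ivars
end

# The table that the earlier `Foo.new true` call would have built.
index_table = { a: 0, b: 1, c: 2, d: 3 }

# Simulate `Foo.new false`: only @c and @d are set.
ivars = []
store_at(ivars, index_table[:c], "baz")
store_at(ivars, index_table[:d], "hoge")
p ivars
```

Even though only two variables were set, the list ends up four slots wide, because the width is decided by the largest index rather than by the number of values.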

Instance Variable List Allocation and Expansion

We saw how the instance variable index table is created. Now I want to spend some time focusing on the instance variable list. This list is associated with the instance and stores references to our actual instance variable values.

This list is lazily allocated and expands as it needs to accommodate more values. Here is the code that figures out by how much the array should grow.

I’ve translated that function to Ruby code and added a few more comments:

def iv_index_tbl_newsize(ivup)
  index = ivup.index
  newsize = (index + 1) + (index + 1)/4 # (index + 1) * 1.25

  # if the index table *wasn't* extended, then clamp the newsize down to
  # the size of the index table. Otherwise, use a size 25% larger than
  # the requested index
  if !ivup.iv_extended && ivup.index_table.size < newsize
    ivup.index_table.size
  else
    newsize
  end
end

IVarUpdate = Struct.new(:index, :iv_extended, :index_table)

index_table = { a: 0, b: 1, c: 2, d: 3 } # table from our examples

# We're setting `@c`, which has an index of 2. `false` means we didn't mutate
# the index table.
p iv_index_tbl_newsize(IVarUpdate.new(index_table[:c], false, index_table))

The return value of iv_index_tbl_newsize is used to determine how much memory we need for the instance variable array. As you can see, its return value is based on the index of the instance variable, and we got that index from the index table.

If the index table was mutated, then we let the instance variable list grow to about 25% more than the slot we asked for, with nothing to cap it. But if the index table was not mutated, then we clamp the array size to the size of the index table.

This means that the first time we allocate a particular Ruby object, it can be larger than subsequent allocations. Again, we can use ObjectSpace.memsize_of to observe this behavior:

require "objspace"

class Foo
  def initialize
    @a = "foo"
    @b = "bar"
    @c = "baz"
    @d = "hoge"
  end
end

p ObjectSpace.memsize_of(Foo.new) # => 80
p ObjectSpace.memsize_of(Foo.new) # => 72
p ObjectSpace.memsize_of(Foo.new) # => 72

The first allocation is larger because it’s the first time we’ve “seen” these instance variables. The subsequent allocations are smaller because Ruby clamps the instance variable array size.
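To make the clamping concrete, here’s a small sketch that re-runs the sizing arithmetic for @d (index 3) in both situations. newsize_for is a made-up helper that takes plain arguments instead of a struct, and the 8 bytes per slot is my assumption about a 64-bit build, not something the sizing function itself knows about.

```ruby
# Same arithmetic as the translated sizing function, with plain arguments.
# `extended` is true when setting this ivar added a new index table entry.
def newsize_for(index, table_size, extended)
  newsize = (index + 1) + (index + 1) / 4
  if !extended && table_size < newsize
    table_size # clamp to the number of known ivars
  else
    newsize    # leave 25% headroom
  end
end

SLOT_BYTES = 8 # assumption: one slot is one 8-byte pointer

# First Foo.new: setting @d just added the 4th entry to the table.
first = newsize_for(3, 4, true)

# Later Foo.new calls: the table already has all 4 entries.
later = newsize_for(3, 4, false)

puts "first allocation:  #{first} slots"
puts "later allocations: #{later} slots"
puts "difference: #{(first - later) * SLOT_BYTES} bytes"
```

Under those assumptions the one-slot difference works out to 8 bytes, which is consistent with the 80 versus 72 that memsize_of reported above.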

Watching the Instance Variable Array Grow

Let’s do one more experiment before we get on to why the initial benchmark behaves the way it does. Here we’re going to watch the size of the object grow as we add instance variables (again, using ObjectSpace.memsize_of):

require "objspace"

class Foo
  def initialize
    @a = 1
    p ObjectSpace.memsize_of(self)
    @b = 1
    p ObjectSpace.memsize_of(self)
    @c = 1
    p ObjectSpace.memsize_of(self)
    @d = 1
    p ObjectSpace.memsize_of(self)
    @e = 1
    p ObjectSpace.memsize_of(self)
    @f = 1
    p ObjectSpace.memsize_of(self)
    @g = 1
    p ObjectSpace.memsize_of(self)
    @h = 1
    p ObjectSpace.memsize_of(self)
  end
end

puts "First"
Foo.new
puts "Second"
Foo.new

Here’s the output from the program:

$ ruby ~/thing.rb
First
40
40
40
80
80
96
96
120
Second
40
40
40
80
80
96
96
104

You can see that as we add instance variables to the object, the object gets bigger! Let’s make one change to the program and run it again. This time we’ll add an option that lets us define the “last” instance variable first:

require "objspace"

class Foo
  def initialize eager_h
    if eager_h
      @h = 1
    end

    @a = 1
    p ObjectSpace.memsize_of(self)
    @b = 1
    p ObjectSpace.memsize_of(self)
    @c = 1
    p ObjectSpace.memsize_of(self)
    @d = 1
    p ObjectSpace.memsize_of(self)
    @e = 1
    p ObjectSpace.memsize_of(self)
    @f = 1
    p ObjectSpace.memsize_of(self)
    @g = 1
    p ObjectSpace.memsize_of(self)
    @h = 1
    p ObjectSpace.memsize_of(self)
  end
end

puts "First"
Foo.new false
puts "Second"
Foo.new true

Here’s the output:

$ ruby ~/thing.rb
First
40
40
40
80
80
96
96
120
Second
104
104
104
104
104
104
104
104

On the first allocation, we can observe the size of the object gradually expand as usual. However, on the second allocation, we ask it to eagerly set @h and the growth pattern is totally different. In fact, it doesn’t grow at all!

Since @h is last in our index table, Ruby immediately expands the instance variable array in order to set the value for the @h slot. Since the instance variable array is now at maximum capacity, none of the subsequent instance variable sets need the array to expand.

Back To Our Initial Benchmark

Every time Ruby needs to expand the instance variable array, it requires calling realloc in order to expand that chunk of memory. We can observe calls to realloc using dtrace.

class Foo
  def initialize forward
    forward ? go_forward : go_backward
  end

  ivars = ("a".."zz").map { |name| "@#{name} = 5" }

  # define the go_forward method
  eval "def go_forward; #{ivars.join("; ")} end"

  # define the go_backward method
  eval "def go_backward; #{ivars.reverse.join("; ")} end"
end

# Heat
Foo.new true

if ARGV[0]
  1000.times { Foo.new false }
else
  1000.times { Foo.new true }
end

Here I’ve rewritten the benchmark so that we can control the direction via a command-line argument. Let’s use dtrace to measure the number of calls to realloc in both situations.

This case is always going forward:

$ sudo dtrace -q -n 'pid$target::realloc:entry { @ = count(); }' -c "/Users/aaron/.rbenv/versions/ruby-trunk/bin/ruby thing.rb"

dtrace: system integrity protection is on, some features will not be available

8369

This case is forward once, then reverse the rest of the time:

$ sudo dtrace -q -n 'pid$target::realloc:entry { @ = count(); }' -c "/Users/aaron/.rbenv/versions/ruby-trunk/bin/ruby thing.rb reverse"

dtrace: system integrity protection is on, some features will not be available

4369

We can see that “starting from the end” decreases the number of calls to realloc significantly. The extra calls to realloc in the forward case are why it’s faster to define our instance variables forward once, then backward the rest of the time!
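If you don’t have dtrace handy, a rough simulation shows the same direction of effect. This sketch reuses the sizing arithmetic from earlier and counts how many times a toy array would have to grow when setting 702 instance variables in each order, with the index table already built. The growth_count helper and the starting capacity of 3 slots are my assumptions, and it counts growth events in a model rather than real realloc calls, so don’t expect the numbers to line up with the dtrace output.

```ruby
EMBEDDED_SLOTS = 3 # assumption: slots available before any growth

# Sizing arithmetic from earlier, with plain arguments.
def newsize_for(index, table_size, extended)
  newsize = (index + 1) + (index + 1) / 4
  !extended && table_size < newsize ? table_size : newsize
end

# Count how often the toy array must grow when ivars are set in the
# given index order. The index table is assumed to be fully built
# already, so `extended` is always false.
def growth_count(indexes, table_size)
  capacity = EMBEDDED_SLOTS
  growths = 0
  indexes.each do |index|
    next if index < capacity # slot already exists, nothing to do
    capacity = newsize_for(index, table_size, false)
    growths += 1
  end
  growths
end

table_size = ("a".."zz").count # same ivar names as the benchmark
indexes = (0...table_size).to_a

forward  = growth_count(indexes, table_size)
backward = growth_count(indexes.reverse, table_size)

puts "ivars:            #{table_size}"
puts "forward growths:  #{forward}"
puts "backward growths: #{backward}"
```

Going backward, the very first set asks for the last slot, the size gets clamped to the table size, and nothing ever needs to grow again.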

I hope this was an interesting article. Please have a good day!